Variational Autoencoder for Prioritising Chemistry

I developed a variational autoencoder (VAE) model that learns and prioritises promising combinations of chemical elements for synthesis and functional performance. Trained on historical chemical data, the model captures underlying patterns and suggests novel phase fields with high potential, reducing experimental trial and error. The implementation uses modern Python ML frameworks (e.g., PyTorch, TensorFlow, Keras) and is fully open source to enable reproducibility and further development.

View code on GitHub
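For readers unfamiliar with VAEs, the sketch below shows the two ingredients that distinguish a VAE from a plain autoencoder: the reparameterisation trick and the closed-form KL term of the training loss (the negative ELBO). It is a minimal pure-Python sketch under stated assumptions, not the published model. It assumes each phase field is encoded as a hypothetical binary element-presence vector `x` that is reconstructed through Bernoulli probabilities `p`, and it omits the encoder and decoder networks that would produce `mu`, `log_var` and `p`.

```python
import math
import random


def reparameterise(mu, log_var, rng=random):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1).
    In an autodiff framework this lets gradients flow through mu and log_var."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(exp(log_var))) || N(0, I))."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


def bernoulli_nll(x, p, eps=1e-7):
    """Reconstruction loss for a binary element-presence vector x given decoder probabilities p."""
    total = 0.0
    for xi, pi in zip(x, p):
        pi = min(max(pi, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= xi * math.log(pi) + (1 - xi) * math.log(1.0 - pi)
    return total


def negative_elbo(x, p, mu, log_var):
    """VAE training loss: reconstruction term plus KL regulariser."""
    return bernoulli_nll(x, p) + kl_to_standard_normal(mu, log_var)


# Hypothetical example: 4 candidate elements; the phase field contains elements 0 and 2.
x = [1, 0, 1, 0]
mu, log_var = [0.2, -0.1], [-1.0, -0.5]
z = reparameterise(mu, log_var, rng=random.Random(0))
p = [0.9, 0.1, 0.8, 0.2]  # in a real model these would come from decoder(z)
print(len(z), round(negative_elbo(x, p, mu, log_var), 4))
```

The KL term pulls the latent codes towards a standard normal, which keeps the latent space smooth enough to sample and compare new element combinations.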

G. Han, A. Vasylenko et al., Science 383, 6684 (2024)

A. Vasylenko et al., Nature Communications 12, 5561 (2021)

Algorithms for Chemical Space Exploration

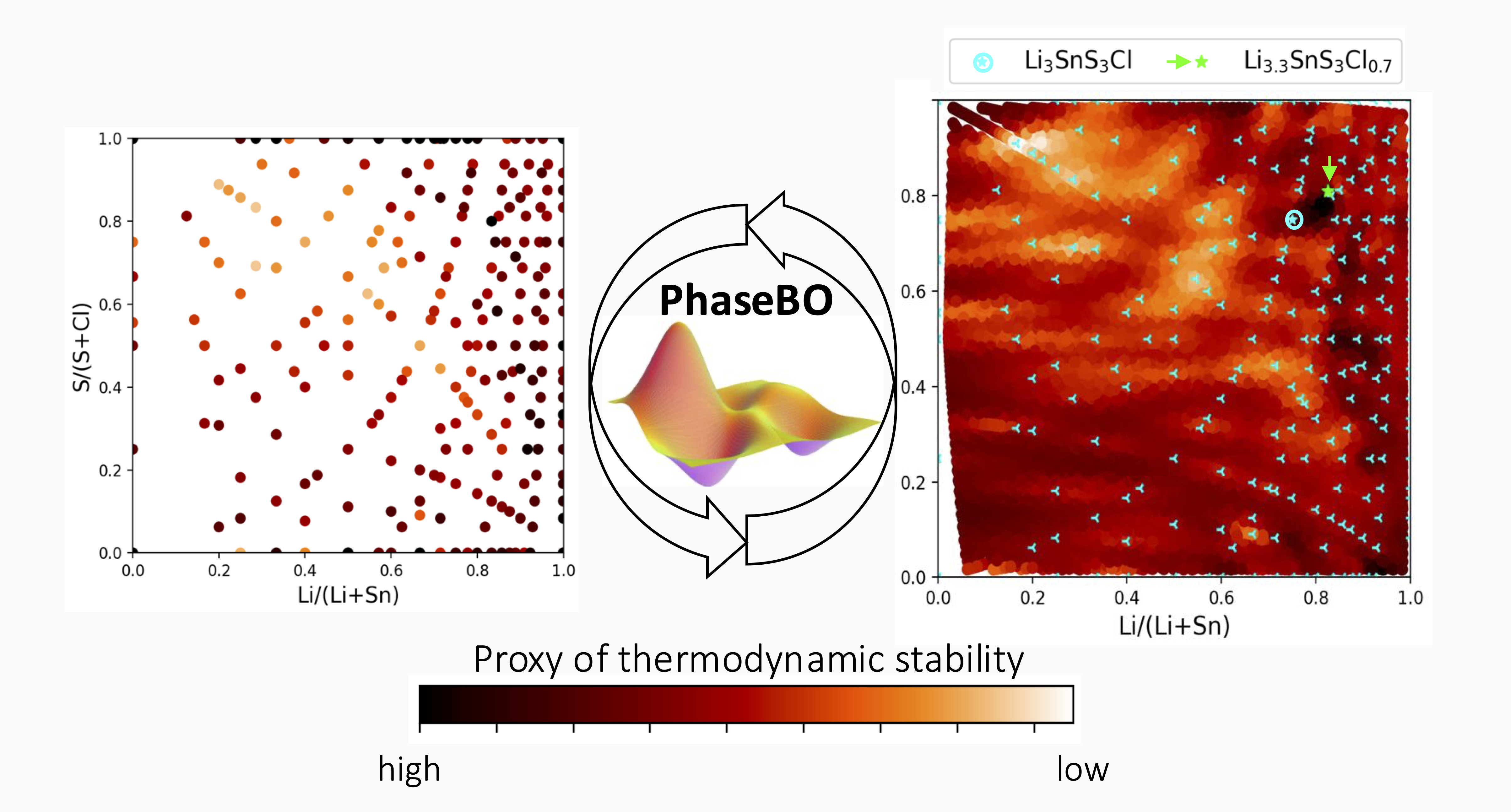

Built a Bayesian optimisation-based framework (PhaseBO) to efficiently navigate complex compositional spaces. PhaseBO uses Expected Improvement as the acquisition function and Thompson sampling for batch selection, combined with uncertainty-reduction strategies, to balance exploration and exploitation.
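The two selection rules named above can be sketched as follows. This is an illustrative pure-Python sketch, not PhaseBO's code: it assumes a surrogate model (for example a Gaussian process) has already produced a posterior mean `mus[i]` and standard deviation `sigmas[i]` for each candidate composition, with higher values being better. For simplicity it treats the candidates' posteriors as independent Gaussians, whereas full Thompson sampling would draw from the joint posterior.

```python
import math
import random


def normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(mu, sigma, best, xi=0.0):
    """Expected Improvement (maximisation) for one candidate with posterior N(mu, sigma^2)."""
    improvement = mu - best - xi
    if sigma <= 0.0:
        return max(improvement, 0.0)  # no uncertainty: improvement is deterministic
    z = improvement / sigma
    return improvement * normal_cdf(z) + sigma * normal_pdf(z)


def thompson_batch(mus, sigmas, k, rng=random):
    """Select up to k distinct candidates: for each batch slot, draw one posterior
    sample per candidate and keep the best candidate not already chosen."""
    chosen = []
    for _ in range(min(k, len(mus))):
        samples = [rng.gauss(m, s) for m, s in zip(mus, sigmas)]
        remaining = (i for i in range(len(mus)) if i not in chosen)
        chosen.append(max(remaining, key=lambda i: samples[i]))
    return chosen


# Hypothetical posterior over four candidate compositions.
mus, sigmas = [0.10, 0.40, 0.35, 0.05], [0.05, 0.02, 0.20, 0.30]
ei = [expected_improvement(m, s, best=0.38) for m, s in zip(mus, sigmas)]
print(ei.index(max(ei)), thompson_batch(mus, sigmas, k=2, rng=random.Random(0)))
```

Expected Improvement rewards both a high mean and high uncertainty, while random posterior draws in the batch step naturally spread a batch across several plausible candidates instead of repeating the single best guess.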

Compared with conventional grid searches, PhaseBO provides fine-grained coverage of the compositional space and achieved a 50% increase in the probability of discovering new stable compositions. The implementation is modular, open source, and designed to be extensible to other scientific discovery tasks, such as multi-objective optimisation or design of experiments.
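One way to see why grid searches struggle to give fine-grained coverage is to count grid points. A composition grid with step 1/n over an N-element phase field contains C(n + N − 1, N − 1) points (a standard stars-and-bars count), which grows rapidly as the step shrinks or elements are added. The helper below is a hypothetical illustration of that count, not part of PhaseBO.

```python
from math import comb


def grid_size(n_elements, steps):
    """Number of compositions x_1 + ... + x_N = 1 where every x_i is a multiple of 1/steps."""
    return comb(steps + n_elements - 1, n_elements - 1)


for steps in (10, 20, 100):
    # ternary vs quaternary phase fields at step 1/steps
    print(steps, grid_size(3, steps), grid_size(4, steps))
# 10 66 286
# 20 231 1771
# 100 5151 176851
```

Refining a quaternary grid from 10% to 1% steps multiplies the number of candidate compositions by roughly 600, which is the cost that an adaptive, model-guided search avoids.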

View code on GitHub

Generative AI for crystal structure design

Developed PIGEN, a physics-informed diffusion method for crystal structure generation that embeds chemically grounded descriptors of compactness and local-environment diversity into training and sampling. By conditioning on these descriptors, PIGEN balances structural stability and novelty, enabling the generation of frameworks beyond known prototypes. The work also introduces a chemistry-aware validation workflow and demonstrates how generative models can seed and accelerate crystal structure prediction.

View code on GitHub
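The compactness and local-environment-diversity descriptors are not defined on this page, so the sketch below uses two common stand-ins purely for illustration: the atomic packing fraction (total hard-sphere volume divided by cell volume) as a compactness proxy, and the Shannon entropy of the coordination-number distribution as a diversity proxy. Both are assumptions and not necessarily PIGEN's actual descriptors. In practice the radii and coordination numbers would come from a structure-analysis tool.

```python
import math
from collections import Counter


def packing_fraction(radii, cell_volume):
    """Hypothetical compactness proxy: fraction of the cell volume filled by hard spheres."""
    return sum(4.0 / 3.0 * math.pi * r ** 3 for r in radii) / cell_volume


def environment_entropy(coordination_numbers):
    """Hypothetical diversity proxy: Shannon entropy (in nats) of the coordination-number distribution."""
    counts = Counter(coordination_numbers)
    total = len(coordination_numbers)
    return -sum((c / total) * math.log(c / total) for c in counts.values())


# Simple cubic cell with edge 1 and one atom of radius 0.5: pi/6
print(round(packing_fraction([0.5], 1.0), 3))  # 0.524
# Two equally common environments: ln 2
print(round(environment_entropy([4, 4, 6, 6]), 3))  # 0.693
```

Scalar descriptors like these can be passed to a conditional generative model alongside the noisy structure, so that sampling can be steered towards a chosen compactness and diversity.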

A. Vasylenko et al., Preprint (2025)